Why AI Confidence Is Mistaken for Intelligence

AI confidence feels like intelligence — but it isn’t. This investigation explains why fluent certainty is a design artefact, not understanding.

Live data access lets AI retrieve information — not learn from it. Here’s why those are fundamentally different processes.

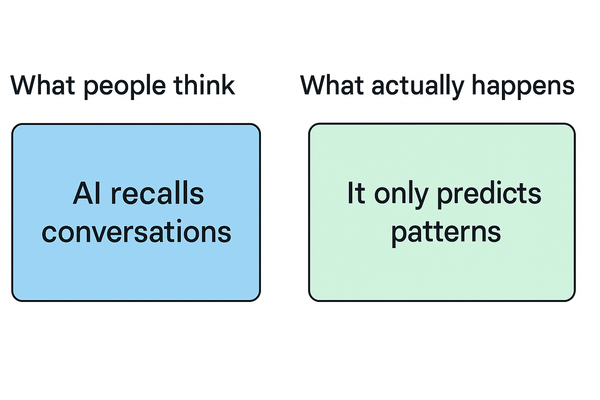

A common claim suggests AI models secretly store everything users type and can recall it later. There is no evidence this is how modern language models work.

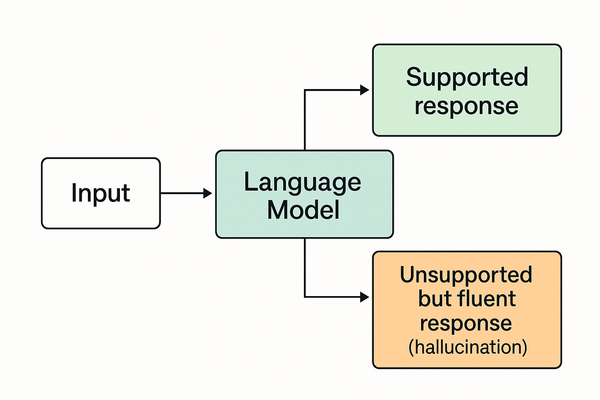

What AI “hallucinations” actually are — and what they aren’t. This explanation clarifies why models confidently produce false outputs, how prediction differs from knowledge, and why hallucinations are a structural limitation rather than a bug or deception.

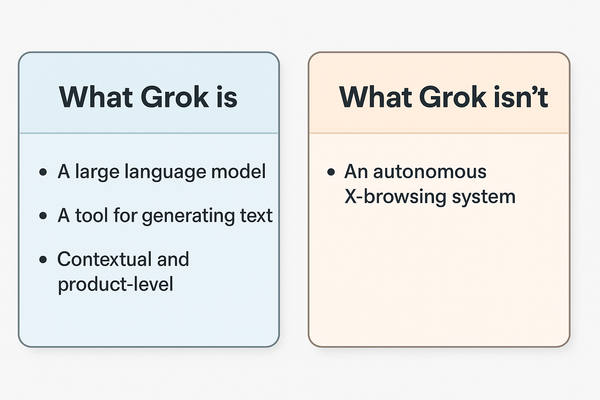

This explanation separates Grok’s technical reality from the claims surrounding it, clarifying how the system works, why it feels different, and where common misconceptions arise.

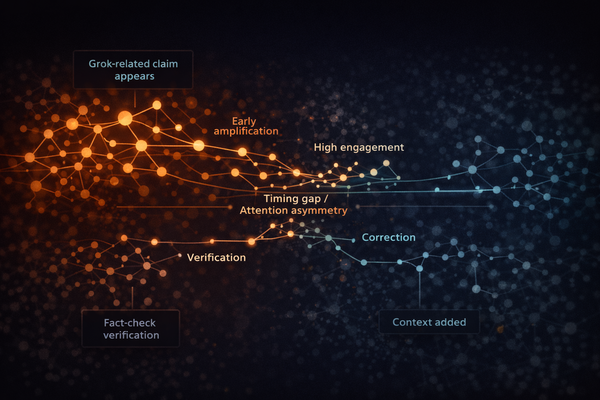

This investigation examines the mechanics, amplification patterns, and platform dynamics that allow claims about Grok to outpace factual verification.

A widely shared claim suggests Grok will run fully on-device inside Tesla vehicles. There is no evidence this is technically feasible or planned.

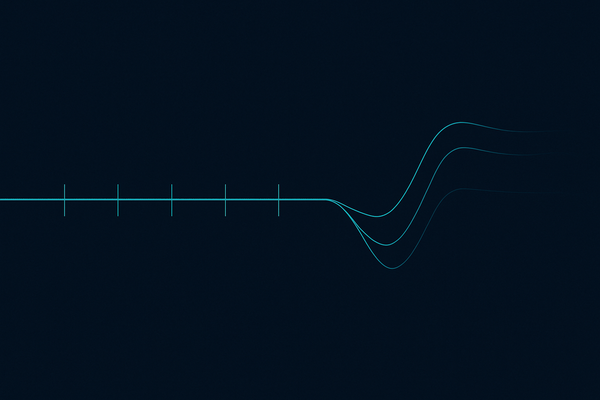

An investigation into how AI release dates are invented, amplified, and recycled across forums, influencers, and YouTube channels, and why these timelines are almost always wrong.

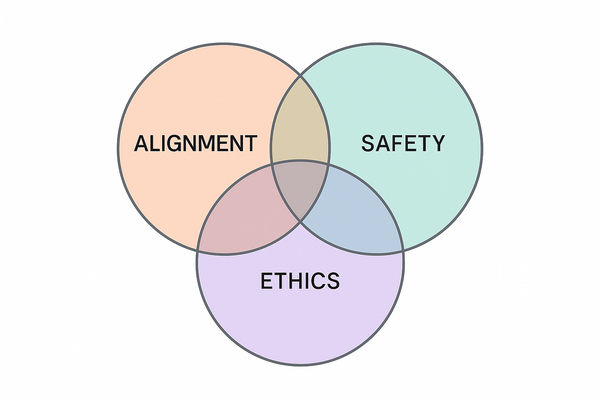

A clear explanation of what AI “alignment” really means, how it works, and why it is often misunderstood as a guarantee of safety or moral behaviour.

A circulating rumour claims GPT-5 has shown “near-human reasoning,” prompting OpenAI to accelerate its timeline. There is no evidence supporting these claims.

YouTube’s AI “explainer” channels have become a major source of fabricated AI news. This investigation reveals how these channels turn rumours into viral “breaking stories” using stock footage, AI voices, and zero evidence.

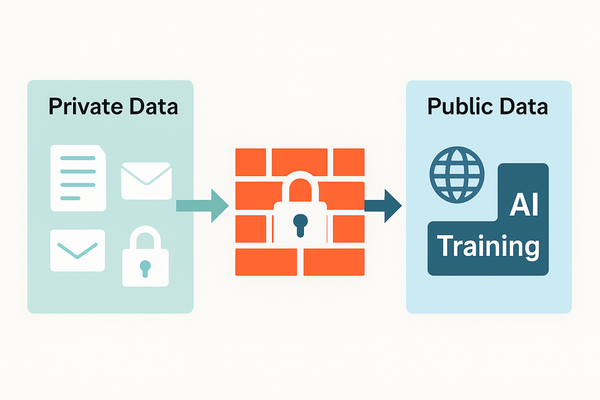

A clear breakdown of why claims about OpenAI training on private emails and messages don’t hold up — and why this rumour keeps returning.

Investigations

An investigation into what AI companies actually train on, what they don’t, and why the “they used my private data” rumour continues to spread.

Explanations

This explanation clarifies what model memory is, what it is not, and why the confusion persists.

Investigations

A timeline investigation into how “sparks of AGI” has been used as strategic rhetoric by major AI companies.

Explanations

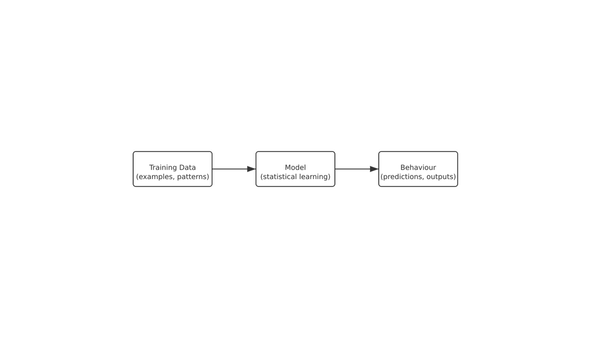

A clear explanation of what training data is, how AI models learn from it, and why misunderstandings about data lead to exaggerated claims about capability.

Investigations

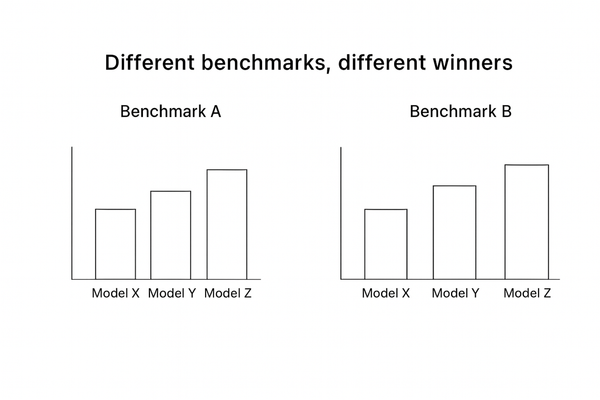

An investigation into how AI companies use benchmarks to shape perception, influence investors, and manage competitive narratives.

Explanations

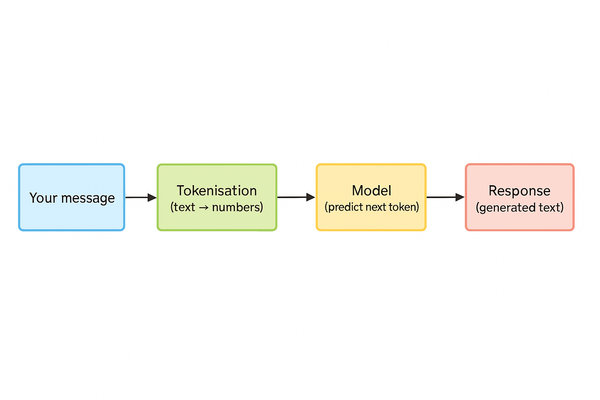

A clear explanation of how chatbots work behind the scenes by converting text into tokens and predicting the most likely next output.

Explanations

A clear explanation of what an AI model actually is, how it works, and what it does and does not understand.

Rumours

Investigating the rumour that a prompt engineer reported Gemini Ultra 1.5 behaving in a way described as “conscious.”

Rumours

Assessing the rumour that the EU is developing a covert “shadow-GPT” to monitor and police AI models across the bloc.

Rumours

Evaluating the claim that IBM is experimenting with a quantum-enhanced LLM capable of predicting market movements.

Rumours

Investigating claims that IBM has an unreleased model allegedly capable of predicting stock movements with high accuracy.

Rumours

Assessing the rumour that DeepSeek’s next model will be able to solve NP-complete problems in real time.