Why AI Confidence Is Mistaken for Intelligence

AI confidence feels like intelligence — but it isn’t. This investigation explains why fluent certainty is a design artefact, not understanding.

Live data access lets AI retrieve information — not learn from it. Here’s why those are fundamentally different processes.

A common claim suggests AI models secretly store everything users type and can recall it later. There is no evidence this is how modern language models work.

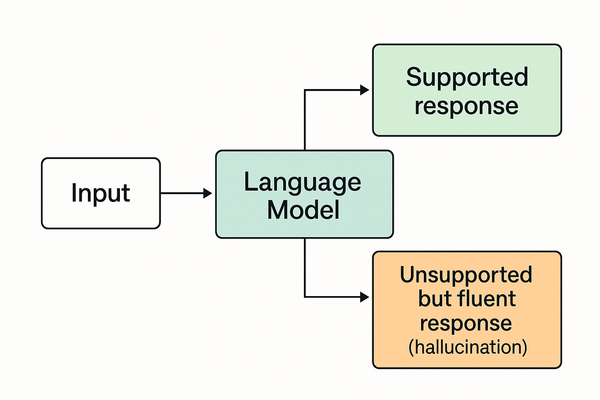

What AI “hallucinations” actually are — and what they aren’t. This explanation clarifies why models confidently produce false outputs, how prediction differs from knowledge, and why hallucinations are a structural limitation rather than a bug or deception.

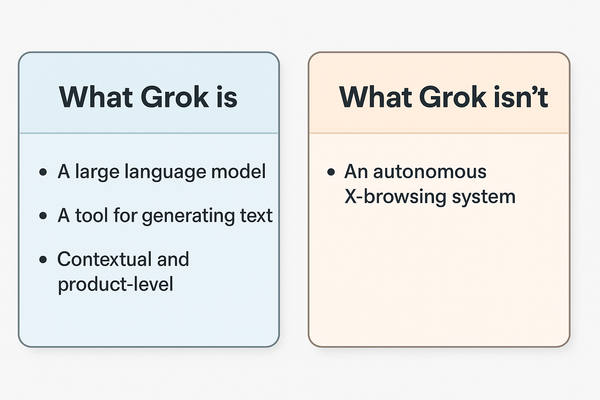

This explanation separates Grok’s technical reality from the claims surrounding it, clarifying how the system works, why it feels different, and where common misconceptions arise.

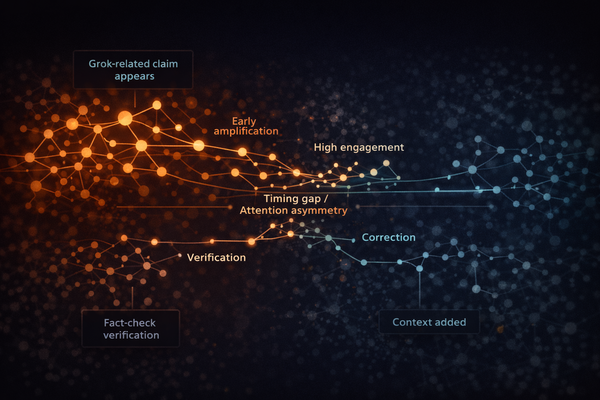

This investigation examines the mechanics, amplification patterns, and platform dynamics that allow claims about Grok to outpace factual verification.

A widely shared claim suggests Grok will run fully on-device inside Tesla vehicles. There is no evidence this is technically feasible or planned.

An investigation into how AI release dates are invented, amplified, and recycled across forums, influencer accounts, and YouTube channels, and why these timelines are almost always wrong.

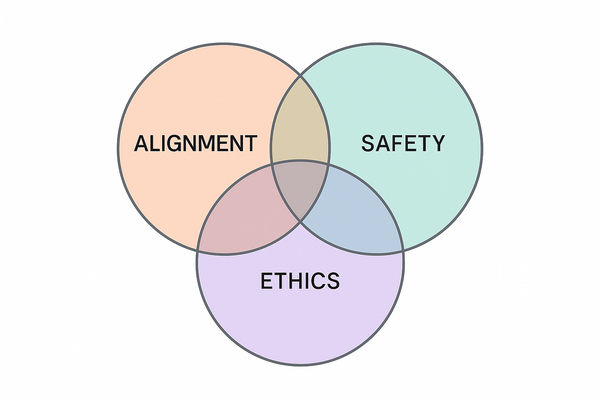

A clear explanation of what AI “alignment” really means, how it works, and why it is often misunderstood as a guarantee of safety or moral behaviour.

A circulating rumour claims GPT-5 has shown “near-human reasoning,” prompting OpenAI to accelerate its timeline. There is no evidence supporting these claims.

YouTube’s AI “explainer” channels have become a major source of fabricated AI news. This investigation shows how these channels turn rumours into viral “breaking stories” using stock footage, AI voices, and zero evidence.

A clear breakdown of why claims about OpenAI training on private emails and messages don’t hold up — and why this rumour keeps returning.