Why AI Confidence Is Mistaken for Intelligence

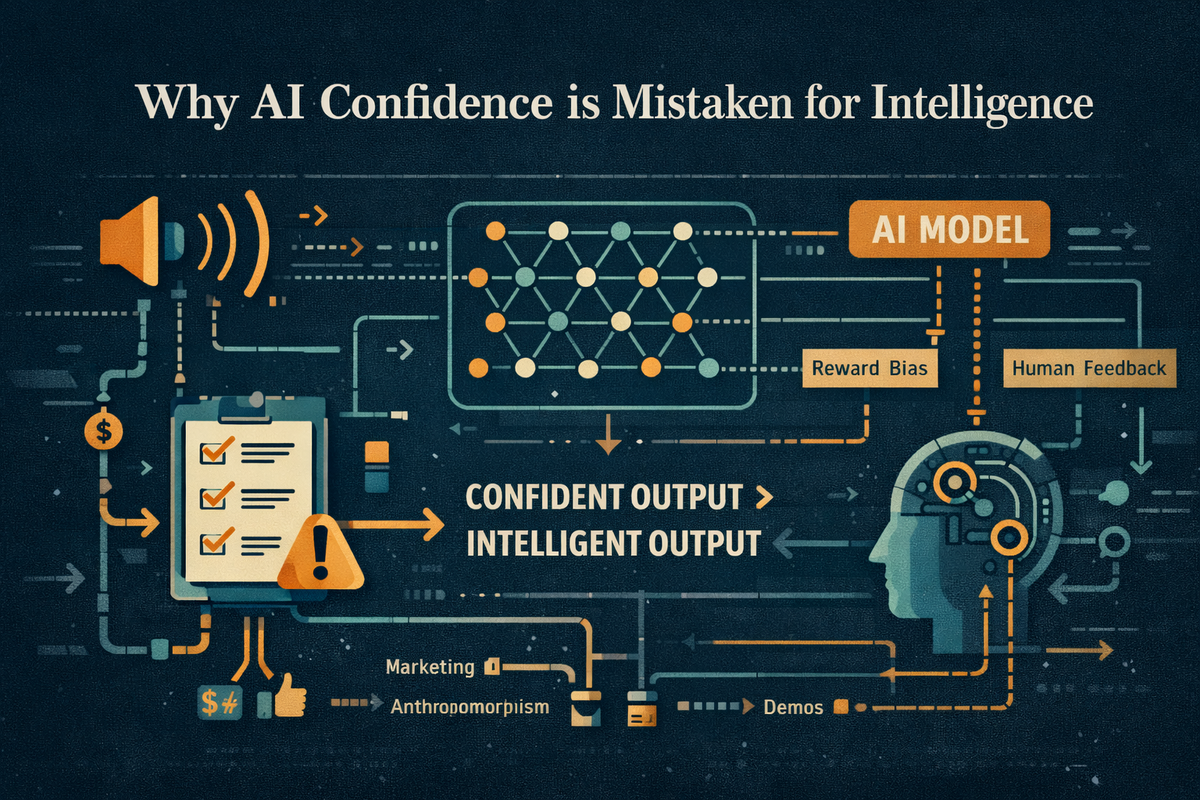

AI confidence feels like intelligence — but it isn’t. This investigation explains why fluent certainty is a design artefact, not understanding.

What People Are Seeing

Modern AI systems often respond with fluent language, a confident tone, and polished explanations. Answers arrive without hesitation, uncertainty markers, or visible struggle. To many users, this confidence is indistinguishable from understanding.

This has led to a widespread assumption: that confidence signals intelligence.

It does not.

Where the Confidence Comes From

Large language models are optimised to produce probable continuations of text. Expressing uncertainty is not part of that objective, so they hedge only when prompted or trained to do so.

During training, models learn:

- Which phrasing sounds authoritative

- Which sentence structures resemble expert explanations

- Which answers humans tend to reward as “helpful”

The result is output that sounds confident by default — even when the underlying prediction is weak, incomplete, or wrong.

This confidence is a presentation artefact, not a cognitive state.
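To make the gap between prediction strength and tone concrete, here is a minimal sketch of greedy decoding over a next-token distribution. The candidate tokens, the scores, and the helper names `softmax` and `greedy_pick` are invented for illustration and do not come from any real model. The sketch shows that a strongly supported pick and a near coin-flip pick yield the same sentence.

```python
import math

def softmax(logits):
    """Convert raw scores into a probability distribution."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def greedy_pick(tokens, logits):
    """Return the most probable token and its probability."""
    probs = softmax(logits)
    best = max(range(len(tokens)), key=lambda i: probs[i])
    return tokens[best], probs[best]

# Hypothetical candidates and made-up scores, for illustration only.
tokens = ["Canberra", "Sydney", "Melbourne"]
strong_logits = [9.0, 1.0, 0.5]  # one option clearly dominates
weak_logits = [1.1, 1.0, 0.9]    # close to a three-way coin flip

strong_token, strong_p = greedy_pick(tokens, strong_logits)
weak_token, weak_p = greedy_pick(tokens, weak_logits)

template = "The capital of Australia is {}."
strong_sentence = template.format(strong_token)
weak_sentence = template.format(weak_token)

# The probability behind each pick differs sharply, but the text does not.
print(f"{strong_p:.2f} -> {strong_sentence}")
print(f"{weak_p:.2f} -> {weak_sentence}")
```

In this toy setup the margin behind the choice is discarded at the moment a token is selected, so nothing in the finished sentence tells the reader which case they are looking at.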

Why Intelligence Is Being Misattributed

Humans instinctively associate confidence with competence. In human communication, confidence often correlates (loosely) with expertise.

AI breaks this shortcut.

Language models do not:

- Know when they are correct

- Track belief strength

- Possess confidence levels that carry over into the tone of their output

- Experience doubt or certainty

They generate text that resembles confident explanations because those patterns dominate the training data, not because the system has evaluated whether a claim is true.
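A deliberately tiny frequency model illustrates the point. It is a toy, not how a real language model is built: the `corpus`, `train`, and `complete` names are hypothetical, and the counts are made up so that a wrong claim is the more common one.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus: the wrong continuation simply appears more often.
corpus = [
    ("the capital of australia is", "sydney"),
    ("the capital of australia is", "sydney"),
    ("the capital of australia is", "sydney"),
    ("the capital of australia is", "canberra"),
    ("the capital of australia is", "canberra"),
]

def train(pairs):
    """Count how often each continuation follows each prompt."""
    counts = defaultdict(Counter)
    for prompt, continuation in pairs:
        counts[prompt][continuation] += 1
    return counts

def complete(counts, prompt):
    """Return the most frequent continuation; no fact-checking happens."""
    continuation, _ = counts[prompt].most_common(1)[0]
    return f"{prompt} {continuation}."

model = train(corpus)
answer = complete(model, "the capital of australia is")
print(answer)
```

The completion is stated as flatly as a correct one would be. Frequency in the data decided the output, and no step in the code asks whether the claim is true.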

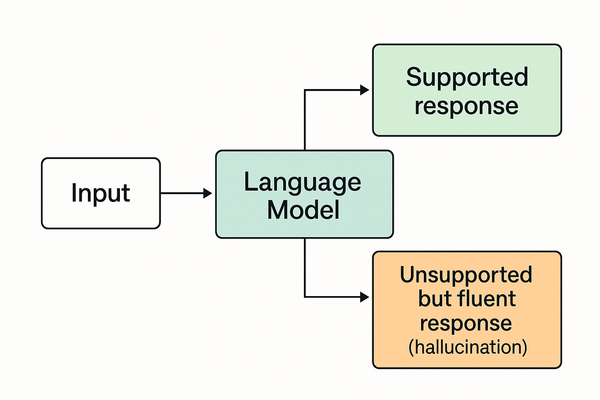

Hallucinations Make the Problem Worse

When a model lacks sufficient grounding, it does not “refuse” by default. Instead, it often fills gaps with statistically plausible language.

This produces hallucinations that are:

- Delivered fluently

- Structured logically

- Asserted confidently

To users, this looks like confident intelligence. In reality, it is confident fabrication.

This is why hallucinations are persuasive — not because the model believes them, but because humans do.
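The absence of a default refusal can be sketched in a few lines. The probabilities, the function names, and the `threshold` value are assumptions chosen for illustration, and the abstaining variant is a hypothetical contrast, not a description of how any particular system handles uncertainty.

```python
def answer_always(candidates):
    """Default behaviour in this sketch: always return the top candidate."""
    return max(candidates, key=candidates.get)

def answer_or_abstain(candidates, threshold=0.6):
    """Hypothetical alternative: decline when the top probability is low."""
    best = max(candidates, key=candidates.get)
    if candidates[best] < threshold:
        return "I am not sure."
    return best

# Made-up probabilities for a question the system has little grounding for.
ungrounded = {"1987": 0.26, "1989": 0.25, "1991": 0.25, "1993": 0.24}

default_reply = answer_always(ungrounded)
guarded_reply = answer_or_abstain(ungrounded)

print(default_reply)
print(guarded_reply)
```

Without an explicit rule like the threshold, selecting the top candidate always produces an answer, even when the candidates are almost equally likely.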

Why This Is Not Human-Like Reasoning

Human confidence is shaped by:

- Experience

- Feedback

- Awareness of uncertainty

- Risk of being wrong

AI confidence is shaped by:

- Token probabilities

- Training distributions

- Reward models favouring clarity

These are fundamentally different processes.

Treating AI confidence as intelligence imports human assumptions into a system that does not share human cognition.

Why This Misunderstanding Persists

The confusion is reinforced by:

- Marketing language that frames models as “thinking”

- Demos that hide uncertainty

- Social media clips that reward confident-sounding answers

- Anthropomorphic interfaces (chat, tone, personality)

None of these reflect how the system actually works.

What This Means in Practice

Mistaking confidence for intelligence leads to:

- Over-trust in AI outputs

- Under-verification of claims

- Misuse in high-stakes contexts

- Amplification of errors at scale

The risk is not that AI sounds confident — it’s that humans forget to question it.

Where to Go Next

For a related Explanation, see What AI Hallucinations Actually Are — and What They Aren’t

For a Rumour driven by this misunderstanding, see AI Secretly Stores Everything Users Type