What is an AI model, anyway?

A clear explanation of what an AI model actually is, how it works, and what it does and does not understand.

Artificial intelligence attracts an extraordinary amount of marketing gloss, jargon, and confident speculation. Much of it obscures a simple question:

What, exactly, is an AI model?

Here is the answer — without mystique, mythology, or hype.

An AI model is a mathematical system that maps input to output

At its core, an AI model is nothing more than a vast collection of numbers arranged in a specific structure. Those numbers determine how the model transforms an input into an output:

- You type text → it predicts the next word

- You show it an image → it identifies what’s in it

- You give it audio → it transcribes the speech into text

There is no personality, agency, consciousness, intention, or inner life.

There is only statistical mapping.
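To make "a collection of numbers that maps input to output" concrete, here is a minimal sketch of a toy two-input, two-output linear model. The weights, the biases and the names `WEIGHTS`, `BIASES` and `predict` are all hypothetical and made up for illustration. Real models have vastly more numbers and more elaborate structure, but the output is still produced by arithmetic on stored values.

```python
# A toy "model": its entire behaviour is fixed by these stored numbers.
# The values are invented for illustration; a real model learns
# millions or billions of such values during training.
WEIGHTS = [
    [0.9, -0.2],   # weights used to score output label 0
    [-0.4, 0.8],   # weights used to score output label 1
]
BIASES = [0.1, -0.1]


def predict(features: list[float]) -> int:
    """Map an input vector to an output label using only arithmetic."""
    scores = [
        sum(w * x for w, x in zip(row, features)) + b
        for row, b in zip(WEIGHTS, BIASES)
    ]
    # The "answer" is simply the index of the highest score.
    return max(range(len(scores)), key=lambda i: scores[i])


print(predict([1.0, 0.0]))  # 0
print(predict([0.0, 1.0]))  # 1
```

Change any number in `WEIGHTS` and the model's behaviour changes with it. There is nothing else inside.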

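Large language models (LLMs) such as GPT, Claude, Gemini, and Llama are essentially huge probability machines. Given a sequence of text, they compute how likely each possible next token (a word or word fragment) is, based on patterns absorbed from enormous amounts of training data, and generate text by choosing from those likelihoods one token at a time.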
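As a rough illustration of the "probability machine" idea, the sketch below converts raw scores for a few candidate next words into probabilities with the softmax function, then picks the most likely one. The prompt, the candidate words and their scores are invented. A real LLM produces scores for every token in a vocabulary of tens of thousands of entries, not for four hand-picked words.

```python
import math


def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


# Hypothetical scores a model might assign to candidate next words
# after the prompt "The cat sat on the". The values are invented.
scores = {"mat": 4.0, "sofa": 2.5, "roof": 1.5, "moon": -1.0}
probs = softmax(scores)

for tok, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(f"{tok:5s} {p:.3f}")

# Greedy decoding: take the single most likely continuation.
print(max(probs, key=probs.get))  # mat
```

Deployed chat systems often sample from this distribution, with settings such as temperature controlling how adventurous the choice is, rather than always taking the top word. That is one reason the same prompt can produce different answers.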

Training: where the useful behaviour comes from

AI models do not start with built-in knowledge. They begin as randomly initialised networks — essentially mathematical nonsense.

Training turns that nonsense into competence.

Training involves:

- Feeding the model large numbers of examples

- Having it make predictions

- Checking how wrong those predictions are, measured by a loss function

- Adjusting the internal numbers slightly to reduce that error, typically using gradient descent

Repeat this loop over billions or trillions of examples and the system becomes increasingly capable.

Not because it “understands” anything, but because it has become an extremely powerful pattern recogniser.
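The predict, measure, adjust loop described above can be sketched with plain gradient descent on a model that has just two numbers, `w` and `b`. The data come from the rule y = 2x + 1, which the model is never told. The learning rate, the number of passes and the variable names are arbitrary choices for this illustration. Real neural networks follow the same loop at enormously larger scale, with the adjustments computed by backpropagation.

```python
import random

# Toy training data generated from the rule y = 2x + 1.
# The model never sees the rule, only (x, y) examples.
data = [(x, 2 * x + 1) for x in [i / 10 for i in range(-20, 21)]]

random.seed(0)
w, b = random.uniform(-1, 1), random.uniform(-1, 1)  # random start: "nonsense"
learning_rate = 0.05

for epoch in range(500):
    grad_w = grad_b = 0.0
    for x, y in data:
        pred = w * x + b          # 1. make a prediction
        error = pred - y          # 2. measure how wrong it is
        grad_w += 2 * error * x   # 3. gradient of squared error w.r.t. w
        grad_b += 2 * error       #    ... and w.r.t. b
    n = len(data)
    w -= learning_rate * grad_w / n  # 4. nudge the numbers to reduce the error
    b -= learning_rate * grad_b / n

print(f"w = {w:.3f}, b = {b:.3f}")
```

After training, `w` and `b` settle very close to 2 and 1. The model has not "understood" the rule. Its two numbers have simply been pushed to values that make its errors small.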

Parameters: the model’s adjustable values

When you hear figures like “70 billion parameters” or “1 trillion parameters”, they refer to the number of adjustable values, mostly connection weights, inside the model.
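To show where such counts come from, here is a sketch that counts the parameters in a simple stack of fully connected layers. Each layer that maps n_in values to n_out values has an n_in × n_out weight matrix plus n_out biases. The function name and the 784-128-10 example network are illustrative assumptions. LLMs use the transformer architecture, which has its own and more involved counting formulas, but the principle of counting every adjustable number is the same.

```python
def count_dense_parameters(layer_sizes: list[int]) -> int:
    """Count weights and biases in a stack of fully connected layers.

    A layer mapping n_in values to n_out values has an n_in x n_out
    weight matrix plus one bias per output.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total


# A small network: 784 inputs (a 28x28 image), 128 hidden units, 10 outputs.
print(count_dense_parameters([784, 128, 10]))  # 101770
```

Counts in the billions arise the same way, only with far wider layers and many more of them.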

More parameters can allow a model to:

- Capture more subtle and complex patterns

- Produce more coherent and contextually appropriate text

- Have greater representational capacity

- Perform more sophisticated reasoning-like behaviours

But size alone does not guarantee quality. Very large models require immense compute and still depend heavily on data quality, training methodology, and fine-tuning.

Models don’t think — they approximate

A persistent misconception is that AI models “reason” like humans. They don’t.

They mimic reasoning by reproducing patterns of language, logic, and behaviour seen in their training data. From the outside, this often looks like reasoning. In practical use, it can function like reasoning.

But internally, it is still numerical optimisation, not thought.

When a model “knows” that Paris is the capital of France, it is not recalling a fact. It is following statistical patterns that strongly favour the word “Paris” appearing after the phrase “capital of France”.

Nothing more.
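The "Paris" example can be illustrated with a counting-based n-gram model. This is a much older and simpler technique than the neural networks inside LLMs and is used here only to make the idea of "favouring" a word tangible. The tiny corpus and the function name `next_word_counts` are invented. The corpus deliberately includes one wrong sentence, so you can see that the model only tracks frequency, not truth.

```python
from collections import Counter

# A tiny invented "training corpus", including one false sentence.
corpus = [
    "the capital of france is paris",
    "paris is the capital of france",
    "the capital of france is paris and it is large",
    "the capital of france is lyon",
]


def next_word_counts(corpus: list[str], context: tuple[str, ...]) -> Counter:
    """Count which word follows the given context across the corpus."""
    counts = Counter()
    n = len(context)
    for sentence in corpus:
        words = sentence.split()
        for i in range(len(words) - n):
            if tuple(words[i:i + n]) == context:
                counts[words[i + n]] += 1
    return counts


counts = next_word_counts(corpus, ("capital", "of", "france", "is"))
print(counts.most_common())  # [('paris', 2), ('lyon', 1)]
```

The model's "knowledge" amounts to the fact that "paris" followed this context more often than "lyon". If the false sentence had been more common, the counts would favour it instead.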

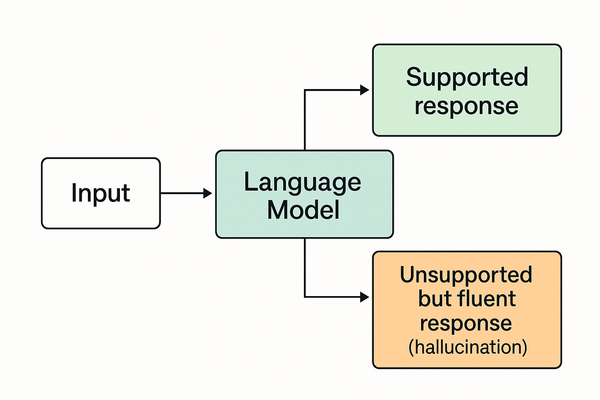

Why models hallucinate

Models do not know truth. They only know likelihood.

When they have strong patterns to draw from, they perform well.

When they don’t, they guess — confidently, and sometimes spectacularly.

Hallucinations are not a bug.

They are a natural outcome of prediction systems that generate the “most plausible answer” without access to ground truth.
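The sketch below shows, under simplified assumptions, why a prediction system answers even when it has little to go on. It picks the highest-probability candidate from a set of invented scores. Nothing in the selection step checks truth, and nothing makes it refuse when every option is almost equally likely. The candidate answers, the scores and the `answer` function are hypothetical.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def answer(candidates: list[str], scores: list[float]) -> tuple[str, float]:
    """Return the most likely candidate and its probability.

    Nothing here checks whether the answer is true, and nothing stops
    it from answering when the options are nearly equally likely.
    """
    probs = softmax(scores)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return candidates[best], probs[best]


# Strong pattern: one candidate clearly dominates.
print(answer(["Paris", "Lyon", "Nice"], [6.0, 1.0, 0.5]))

# Weak pattern (e.g. an obscure question): the scores are almost flat,
# yet an answer is still produced and would be stated just as fluently.
print(answer(["1847", "1852", "1861"], [0.30, 0.25, 0.20]))
```

In the second case, the chosen answer has a probability of only about one in three. The generated sentence around it would not reveal that weakness.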

So what is an AI model?

In one sentence:

An AI model is a massive, trained mathematical system that predicts the most likely output given an input.

Everything else — product branding, demos, dramatic headlines — sits on top of that basic fact.

Why this matters

A clear understanding of what an AI model actually is makes it much easier to cut through the noise that surrounds modern AI. When the basics are understood:

- dramatic claims become easier to evaluate

- “breakthroughs” can be judged on evidence rather than framing

- technical progress can be separated from corporate storytelling

- hype cycles become more transparent

AI systems are powerful, but they are often described in ways that exaggerate capability and minimise limitation. Knowing what these models really do — and what they don’t — provides a grounded foundation for interpreting every rumour, announcement, and supposed revolution that follows.

- For real-world examples of how AI misconceptions spread, see our latest Rumours.

- For an example of AI rhetoric used strategically, see our Investigation on “Sparks of AGI.”

Next in the Explanations series

- How Chatbots Actually Work Behind the Scenes

- What Training Data Really Means — And Why It Matters

- Why AI Hallucinates (And Why It Always Will)

- The Difference Between a Model and an Agent