What "Model Memory" Actually Means in AI

A clear explanation of why large AI models don’t “remember” your chats the way people think they do.

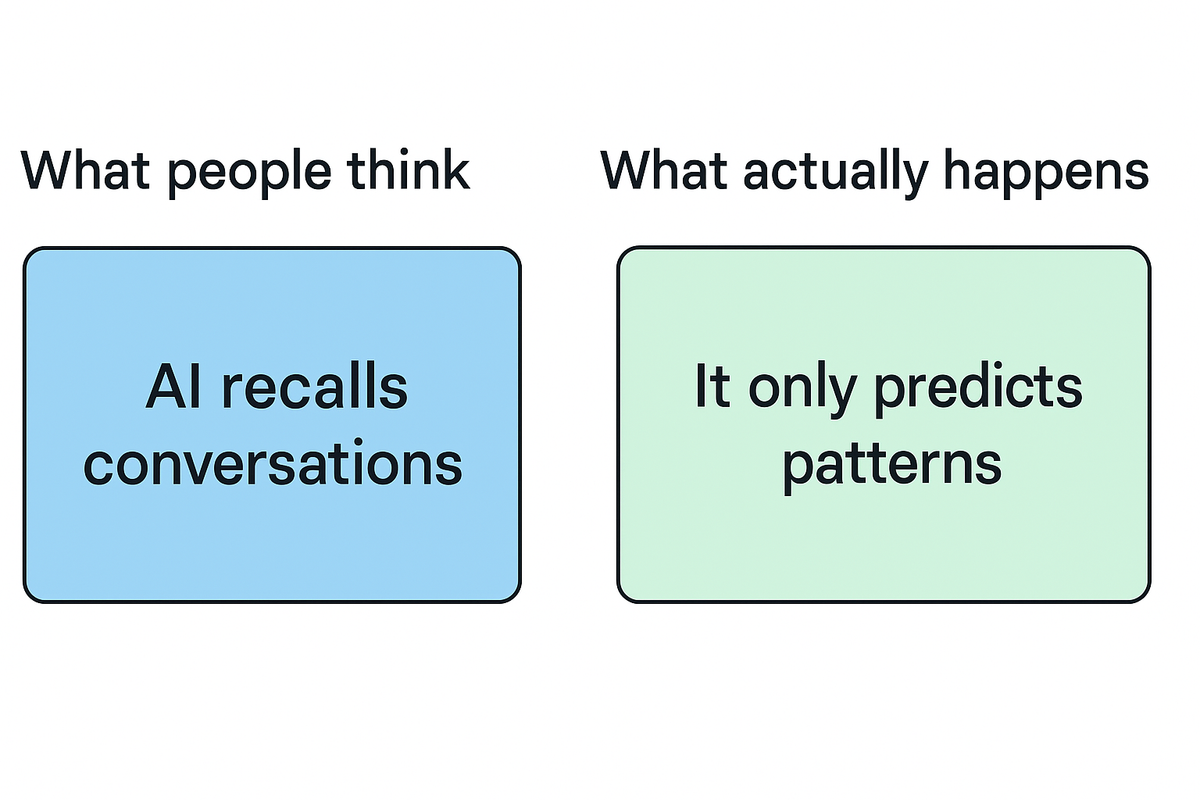

Many AI rumours arise from a basic misunderstanding: people think models “remember” conversations, users, or past chats. When an AI recalls something from earlier in the session, it’s easy to assume it has a long-term memory like a human — or that it is secretly storing information. Neither is true, and the misconception leads to rumours about spying, hidden databases, or “leaking memories.”

This explanation clarifies what model memory is, what it is not, and why the confusion persists. The goal is simple: remove the mystique and show the mechanical reality behind the behaviour.

What AI memory actually is

AI models do not store personal memories. Within a conversation they work from context: the text of the current session, including your messages and the model’s own earlier replies.

Examples:

- If you mention your dog’s name in message 1, the model can use it in message 5 because it is still in the context window.

- If you delete the chat or start a new one, the “memory” disappears instantly, because that text is no longer in the context.

- The model has no ability to recall earlier conversations unless the platform explicitly saves them (and that is a product feature, not a model behaviour).

The core idea:

AI does not remember — it just has access to whatever text is currently on the page.
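The idea can be sketched in a few lines of Python. This is a toy illustration, not how any real model is implemented: `toy_model` and `send` are hypothetical names, and the "model" is just a function that searches the text it is handed. What it shows is the shape of the mechanism: every turn, the whole session is passed in again, and a new session starts empty.

```python
from typing import List

MARKER = "my dog is called "


def toy_model(context: List[str]) -> str:
    """Hypothetical stand-in for a language model.

    It is a pure function of its input and keeps no state between calls.
    Everything it "knows" about the chat is the list of messages passed in.
    """
    for message in context:
        lowered = message.lower()
        if MARKER in lowered:
            start = lowered.index(MARKER) + len(MARKER)
            name = message[start:].strip(" .!")
            return f"Your dog's name is {name}."
    return "I don't see a dog's name in this conversation."


def send(session: List[str], user_message: str) -> str:
    """Append the user's message, then hand the WHOLE session to the model."""
    session.append(user_message)
    reply = toy_model(session)
    session.append(reply)
    return reply


# Session 1: the name is mentioned early and is still in context later.
session_one: List[str] = []
send(session_one, "My dog is called Biscuit.")
later_reply = send(session_one, "What is my dog's name?")

# Session 2: a fresh chat starts with an empty context.
session_two: List[str] = []
fresh_reply = send(session_two, "What is my dog's name?")

print(later_reply)
print(fresh_reply)
```

The second session gets the fallback answer, not because anything was erased inside the model, but because the earlier text was never part of what it was given.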

AI memory is not human memory

This is where many rumours start.

People assume:

- If the model references something meaningful to them, it must “remember” them.

- If it adapts its tone, it must be learning.

- If it recalls a detail from earlier in the session, it must be storing it forever.

All incorrect.

Illustrative examples:

- A chatbot responding politely over time is not “learning your personality.” It is following patterns based on the text in front of it.

- If you return tomorrow and it acts as if it knows nothing, that is not “amnesia” — it’s how the system works.

- A model saying “As we discussed earlier…” is not remembering — it is reading.

The distinction is simple and sharp:

Context ≠ memory.

Where the confusion comes from

Rumours about AI memory tend to come from three places:

- Session context — users see continuity and mistake it for memory.

- Product features — chat apps sometimes save your conversation history, which the model can read if you reopen the thread.

- Anthropomorphism — humans are wired to project mental states onto anything that uses language.

AI models do not draw from a personal database about you. They do not recognise you, track you, or store details unless an external product system tells them to.

A short note on what they don’t draw from:

There is no hidden record of past conversations sitting inside the neural network. The weights contain statistical patterns from training — not your data, not your identity, not your chats.
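A rough sketch of where saved chats live, under stated assumptions: `ThreadStore`, `WEIGHTS`, and `toy_model` are hypothetical names invented for illustration, and the three numbers standing in for weights are placeholders. The point is only the division of labour: the app's storage holds the conversation text, and the model reads whatever the app loads back into context.

```python
from typing import Dict, List, Tuple

# Hypothetical frozen "weights": fixed after training, never written at chat time.
WEIGHTS: Tuple[float, ...] = (0.12, -0.98, 0.45)


def toy_model(weights: Tuple[float, ...], context: List[str]) -> str:
    """Pure function: reads weights and context, modifies neither."""
    if any("allergic to peanuts" in m.lower() for m in context):
        return "Noted earlier in this thread: you are allergic to peanuts."
    return "Nothing in this thread mentions allergies."


class ThreadStore:
    """Hypothetical app-side storage. Saved chats live here, not in the model."""

    def __init__(self) -> None:
        self._threads: Dict[str, List[str]] = {}

    def load(self, thread_id: str) -> List[str]:
        return list(self._threads.get(thread_id, []))

    def save(self, thread_id: str, messages: List[str]) -> None:
        self._threads[thread_id] = list(messages)


store = ThreadStore()

# Day 1: the app saves the thread after the exchange.
thread = store.load("thread-42")
thread.append("I am allergic to peanuts.")
thread.append(toy_model(WEIGHTS, thread))
store.save("thread-42", thread)

# Day 2, same thread reopened: the app reloads the saved text into context.
reopened = store.load("thread-42")
reopened.append("Any allergies I mentioned?")
reopened_reply = toy_model(WEIGHTS, reopened)

# Day 2, brand-new thread: there is nothing to reload.
new_thread = store.load("thread-43")
new_thread.append("Any allergies I mentioned?")
new_reply = toy_model(WEIGHTS, new_thread)

print(reopened_reply)
print(new_reply)
```

In this sketch, deleting the entry from `ThreadStore` would remove the only copy of the conversation; `WEIGHTS` is identical before and after both days.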

Why this matters

Understanding the difference between context, training data, and memory helps cut through many AI rumours:

- Claims about “AI remembering me from last year” → false unless a product saved that text and fed it back in.

- Claims that models store your personal secrets → false unless a product explicitly logs them.

- Claims that AIs are developing “long-term memory” → incorrect; some platforms add memory features, but the model itself remains stateless.
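To make the last point concrete, here is a minimal sketch of how a platform "memory feature" could work, assuming the platform simply prepends saved notes to each new conversation. The names `NOTE_PREFIX`, `build_context`, and `toy_model` are hypothetical, and real products may work differently; the sketch only shows that such a feature can sit entirely outside the model.

```python
from typing import List

NOTE_PREFIX = "Saved note: the user's name is "


def toy_model(context: List[str]) -> str:
    """Hypothetical stateless model: output depends only on the context passed in."""
    for line in context:
        if line.startswith(NOTE_PREFIX):
            return f"Hello again, {line[len(NOTE_PREFIX):]}."
    return "Hello. I don't know your name."


def build_context(saved_names: List[str], user_message: str) -> List[str]:
    """Hypothetical platform step: prepend saved notes to a new conversation."""
    return [NOTE_PREFIX + name for name in saved_names] + [user_message]


# Same model function in both cases; only the text the platform supplies differs.
with_feature = toy_model(build_context(["Priya"], "Hi!"))
without_feature = toy_model(build_context([], "Hi!"))

print(with_feature)
print(without_feature)
```

Turning the feature off changes what the platform sends, not anything inside `toy_model`, which gives the same output whenever it is given the same input.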

Clarity here stops a lot of unnecessary fear and just as much hype.

Where to go next

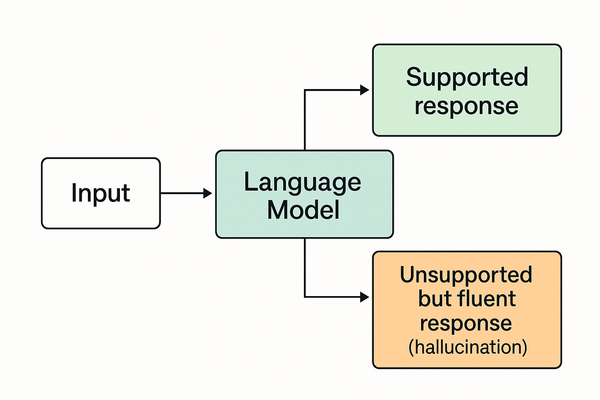

For a related Explanation, see What AI Hallucinations are - and what they aren't

For Rumours shaped by this misconception, see AI secretly storing everything