OpenAI secretly trained GPT on private emails, chats, and personal data.

A clear breakdown of why claims about OpenAI training on private emails and messages don’t hold up — and why this rumour keeps returning.

Claim: OpenAI trained GPT models on private emails, personal messages, and user data scraped without consent.

Verdict: False. There is no evidence that private user data was used for training.

Rumours that OpenAI “trained on private data” spread quickly online whenever a new model is released. The idea sticks because many people assume AI systems store or retrieve personal information, and the misunderstanding fuels a cycle of mistrust and speculation.

The Claim

The rumour alleges that GPT models were trained using:

- private emails

- chat logs

- personal documents

- cloud-stored files

It is often framed as “OpenAI scraped everything,” or “AI read everyone’s inbox,” without offering evidence or sources.

The Source

This rumour typically originates from:

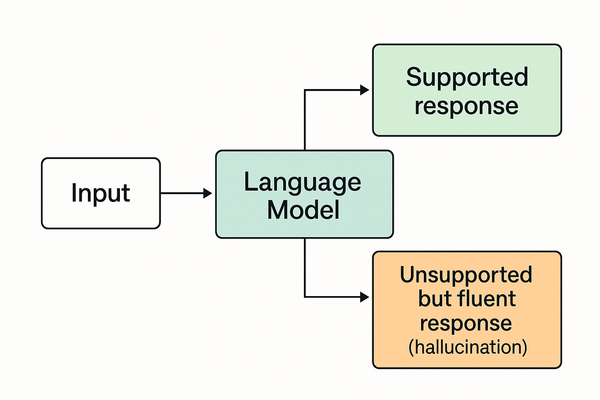

- misinterpretations of statements like “trained on the internet”

- confusion about how models handle context during a chat

- influencers and commentators amplifying fear-based narratives

- older misunderstandings resurfacing during new model launches

None of these sources provide credible documentation supporting the claim.

Our Assessment

Technical disclosures show that:

- GPT models are trained on publicly available data, licensed datasets, and human-generated material.

- Private user data is not included in training datasets.

- Chat inputs are excluded from training unless a user explicitly opts in (post-2023).

- Independent assessments have found no evidence of private inboxes, chats, or personal documents being ingested.

The rumour persists because AI behaviour is misunderstood — not because private data was used.

The Signal

This rumour reflects a broader pattern of:

- low public understanding of AI mechanics

- high emotional engagement with “AI is reading everything” claims

- distrust in tech companies

- viral misinformation shaped for attention rather than accuracy

It is a cultural pattern, not a technical one.

Verdict

Rumour Status: ❌ DEBUNKED

Confidence: 99%

OpenAI did not train GPT models on private emails, chat logs, or personal documents. The rumour spreads because it sounds plausible to worried users, not because it is true.

- For related context, see What “Model Memory” Actually Means in AI

- For broader pattern analysis, see Did OpenAI Train on Everyone’s Private Data?