NVIDIA is “testing a Blackwell-Plus prototype that can generate new model weights without training,” claim leakers

Examining the rumour that NVIDIA is testing a Blackwell-Plus prototype capable of autonomously generating new AI models.

Claim: NVIDIA has a new chip prototype capable of generating entire AI models internally.

Verdict: Unproven — no evidence supports this capability; the leak lacks verifiable technical detail.

The rumour claims NVIDIA has an experimental successor to Blackwell that can “spontaneously generate optimised weight matrices” without training runs. Supposedly the chip just… invents them. As if SGD packed its bags and went home.

The Claim

According to unnamed “supply-chain whispers,” NVIDIA’s research division has been running internal evaluations of a next-generation Blackwell variant that uses an onboard “self-optimising architecture” to produce fully-formed model weights without conventional training.

The rumour insists the system can collapse training cycles from months to minutes by “emerging” optimal parameters directly from hardware-level search dynamics. In other words: the GPU dreams up an LLM.

The Source

As usual, the origin trail consists of:

– Anonymous Telegram channels posting blurry diagrams with no provenance

– “Friend of an NVIDIA engineer” anecdotes from Reddit

– Zero hardware specs, zero patents, zero logs

– Tech-hype influencers repeating each other’s claims without adding a single datapoint

There is no paper, no benchmark, no sign NVIDIA has reinvented optimisation itself. Just vibes.

Our Assessment

It is true that NVIDIA explores exotic accelerator designs and researches non-traditional optimisation strategies, approximate search methods, and hybrid compute-memory topologies. So does every major chip vendor and AI lab.

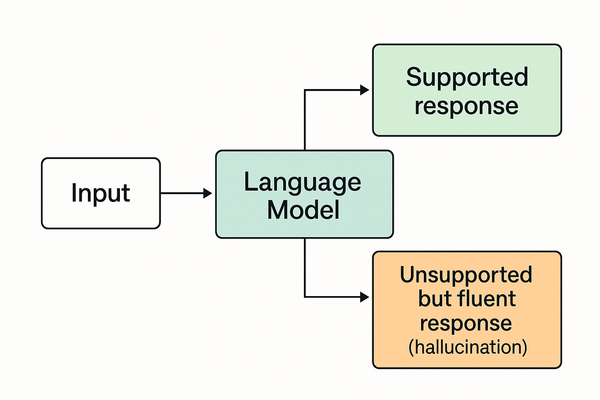

But nothing here lines up with real-world constraints:

– You cannot conjure good weights without training data

– Hardware does not “emerge” semantic structure

– Gradient descent is not optional because someone on Telegram got excited

– Even model distillation requires a trained teacher

– Weight space is astronomically large, so searching it without guidance from data is hopeless
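To put a rough number on that last point, here is a back-of-the-envelope sketch in Python. It assumes each weight is stored in 4 bits (a hypothetical, aggressively quantised format) and counts how many distinct weight settings a model could have. The function name and the toy model sizes are ours, chosen for illustration; nothing here comes from NVIDIA or from the leak.

```python
import math

# Commonly quoted rough estimate: about 10^80 atoms in the observable universe.
LOG10_ATOMS_IN_UNIVERSE = 80


def log10_weight_settings(num_params: int, bits_per_weight: int) -> float:
    """Return log10 of how many distinct weight settings exist.

    Each weight can take 2**bits_per_weight values, so the whole model has
    2**(num_params * bits_per_weight) possible settings. We work in log10
    because the number itself is far too large to print.
    """
    return num_params * bits_per_weight * math.log10(2)


if __name__ == "__main__":
    # Hypothetical toy sizes, all far smaller than any frontier LLM.
    for num_params in (1_000, 1_000_000, 1_000_000_000):
        digits = log10_weight_settings(num_params, bits_per_weight=4)
        print(f"{num_params:>13,} params at 4 bits: about 10^{digits:,.0f} settings")
```

Even the 1,000-parameter toy has around 10^1,204 possible settings, against roughly 10^80 atoms in the observable universe. Blind search does not scale to that, which is why training leans on gradients computed from data rather than on guessing.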

Could NVIDIA be experimenting with accelerators that massively speed up training loops? Absolutely. Do they have a chip that invents weights out of thin air? No.

This rumour confuses “next-gen optimisation research” with “the GPU became sentient and did the work for us.”

The Signal

The real takeaway is simpler:

– Hardware vendors are racing to accelerate optimisation, compression, and fine-tuning

– Expect aggressive hybrid architectures over the next few years

– Training bottlenecks are shifting from compute to memory movement and coordination

– Companies are desperate to reduce the cost curve of frontier models

But none of that implies “training-free LLM creation.” It implies better tools, not magic.

Verdict

Rumour Status: ❌ Hardware Alchemy

Confidence: 97% that the rumour is false

There is no universe where NVIDIA cracked “weights without training” and decided to leak it through Telegram cartoons. The rumour is fun sci-fi, nothing more.

- For background on how models are built, see How Chatbots Work.

- For how companies position new hardware, see The Benchmark Game.