Meta is “testing a secret LLM that can read minds through keyboard patterns,” claim leakers

Assessing claims that Meta is developing an LLM capable of inferring users’ thoughts from their typing patterns.

Claim: Meta has developed an LLM that can read users’ thoughts from typing behaviour.

Verdict: Misleading — keystroke analysis can indicate patterns but cannot infer thoughts; the claim is heavily overstated.

Meta, we are told, has quietly built an internal model that can “read your thoughts” by watching how you type. Not what you type. How. The claim: your pauses, corrections, and key rhythm are apparently enough for a secret LLM to peer directly into your brain. Sure.

The Claim

According to “people familiar with the matter,” Meta is running internal tests of an experimental LLM that can infer a user’s thoughts and emotional state purely from keyboard dynamics — timing, rhythm, corrections, pressure, and other behavioural signals.

The rumour goes further: this system allegedly reconstructs “intended text” the user never actually typed, and infers sensitive attributes (political lean, relationship stress, mental health, etc.) in real time.

The Source

The sourcing is vague “leaker” accounts and anonymous posts, which boil down to:

– “Meta researcher” anecdotes passed via third-hand DMs

– Screenshot-free claims on Twitter/X and Reddit

– Low-effort tech commentary threads that repeat those claims without evidence

– Nothing verifiable: no code, no paper, no demo, no logs, and no technical detail beyond buzzwords like “multimodal” and “latent intent vectors”

In other words: a lot of storytelling, zero artefacts.

Our Assessment

Keystroke dynamics is a real research area. Timing signals, such as how long each key is held and the gaps between keystrokes, have been studied for:

– Authentication (biometric-style “typing fingerprint”)

– Basic affect detection (stress, fatigue, cognitive load)

– Coarse behavioural patterns over time

But that is a long way from:

– Reading specific thoughts

– Reconstructing “what you meant to type but didn’t”

– Diagnosing detailed political, emotional, or mental states in real time

Even a very powerful model is still limited by its input signal. Keyboard metadata is low-bandwidth, noisy, and heavily context-dependent. Without full text, screen content, history, and environment, you’re not getting “mind reading.” You’re getting statistical guesswork with a confidence problem.
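To make “low-bandwidth” concrete, here is a minimal sketch of what keystroke timing data typically reduces to. Everything in it is an illustrative assumption: the `KeyEvent` record, the `timing_features` helper, and the sample timestamps are invented for this example and do not describe any Meta system. It computes dwell time (how long a key is held), flight time (the gap between releasing one key and pressing the next), and a correction count.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class KeyEvent:
    """Hypothetical record of one keystroke (not any real product's schema)."""
    key: str        # key name; a "how, not what" system might discard this
    down_ms: float  # press timestamp in milliseconds
    up_ms: float    # release timestamp in milliseconds


def timing_features(events: list[KeyEvent]) -> dict[str, float]:
    """Summarise a burst of typing as a handful of timing numbers."""
    if not events:
        raise ValueError("need at least one key event")
    # Dwell time: how long each key is held down.
    dwell = [e.up_ms - e.down_ms for e in events]
    # Flight time: gap between releasing one key and pressing the next.
    flight = [b.down_ms - a.up_ms for a, b in zip(events, events[1:])]
    # Corrections: number of Backspace presses.
    corrections = sum(1 for e in events if e.key == "Backspace")
    return {
        "mean_dwell_ms": mean(dwell),
        "mean_flight_ms": mean(flight) if flight else 0.0,
        "max_pause_ms": max(flight, default=0.0),
        "corrections": float(corrections),
    }


# Made-up sample: type "h", "i", hesitate, then delete one character.
sample = [
    KeyEvent("h", 0.0, 90.0),
    KeyEvent("i", 150.0, 230.0),
    KeyEvent("Backspace", 900.0, 980.0),
]
features = timing_features(sample)
print(features)
```

Three keystrokes collapse into four numbers. A long pause followed by a Backspace is consistent with hesitation, a typo, a phone notification, or a cat on the desk, and nothing in those numbers distinguishes between them.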

Could Meta be experimenting with richer behavioural models combining keystrokes, scroll behaviour, app usage, and content? Absolutely. They would be negligent not to explore that for ranking and safety systems. But the jump from “behavioural modelling” to “LLM can read your thoughts from your typing” is classic hype inflation.

The Signal

Behind the nonsense, there are a few real signals:

– Big platforms are absolutely interested in fine-grained behavioural telemetry

– Keystroke and interaction patterns are being studied as inputs for fraud detection, safety systems, and personalisation

– Regulators are nowhere near ready for the privacy implications of large-scale behavioural inference models

The real story is not “mind-reading LLMs.” It’s that the line between “engagement optimisation” and “behavioural profiling” keeps getting blurrier, and the incentives are all pointing in the same direction.

Verdict

Rumour Status: ❌ Keyboard Phrenology With Extra Steps

Confidence: 98%

This is classic tech folklore: start with a real research area (keystroke dynamics), bolt on maximal surveillance anxiety and LLM buzzwords, and you end up with “Meta can read your mind from your typing.” No, it can’t. It can barely read your mood without a mountain of extra data — and even then, it’s guessing.

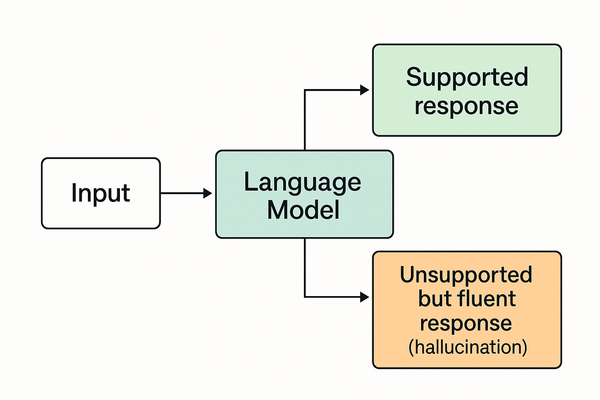

- For background on model functionality, see How Chatbots Work.

- For comparison, see Rumours on Llama 4 and autonomy claims.