Is GPT-5 Showing “Near-Human Reasoning”? Claims of Accelerated Training Examined

A circulating rumour claims GPT-5 has shown “near-human reasoning,” prompting OpenAI to accelerate its timeline. There is no credible evidence supporting these claims.

Claim: OpenAI is accelerating GPT-5's development because internal testers observed near-human reasoning in the model.

Verdict: No credible evidence that GPT-5 has demonstrated near-human reasoning or that OpenAI has altered its training schedule in response.

A rumour circulating across X, Reddit, and AI Discord groups suggests GPT-5 has shown early signs of “near-human reasoning,” prompting OpenAI to accelerate its development timeline. The claim has generated excitement and speculation — but very little verifiable information.

The Claim

According to the rumour, internal testers allegedly observed GPT-5 performing complex reasoning tasks at a level approaching human capability. Supporters claim this discovery caused OpenAI to intensify training efforts and move up internal deadlines. Variations of the rumour reference “private benchmarks,” “insider reports,” or vague comments about testing breakthroughs.

None of these assertions are accompanied by documentation, citation, or confirmation from reputable sources.

The Source

Most versions of the rumour trace back to:

- Anonymous posts on Reddit and Discord

- A handful of high-engagement X accounts known for amplifying unverified model-leak speculation

- Misinterpreted job listings mentioning “next-generation capabilities”

- Comments taken out of context from unrelated podcast appearances by OpenAI researchers

No statements from OpenAI — nor from researchers with direct access to internal evaluations — support the claims.

Our Assessment

There is currently no evidence that GPT-5 has displayed “near-human reasoning.”

Such a milestone would require:

- Transparent benchmark data

- Reproducible evaluations

- Multiple independent reviewers

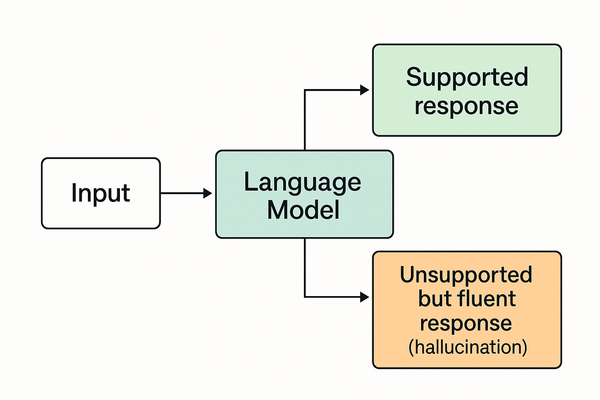

- Clear distinctions between reasoning, pattern generalisation, and plausible-but-wrong outputs

To date, OpenAI has confirmed none of these.

Additionally, model development timelines are influenced by resource constraints, safety audits, organisational priorities, and compute availability — not just performance surprises.

The assertion that OpenAI “accelerated” development is speculative and oversimplifies how large-scale model training is planned and scheduled.

The Signal

This rumour highlights several predictable patterns in AI discourse:

- Breakthrough bias: People favour narratives of sudden leaps toward AGI.

- Opaque development cycles: Limited transparency invites speculation.

- Influencer amplification: High-follower accounts drive rumour spread regardless of evidential basis.

- Conflation of abilities: Strong performance on selective tasks is mistaken for general reasoning capability.

The rumour reflects hype mechanics, not confirmed progress.

Verdict

Rumour Status: ❓ Unverified

Confidence in this assessment: 90% (based on the absence of evidence, unreliable sources, and typical rumour dynamics)

OpenAI has provided no indication that GPT-5 possesses near-human reasoning or that the company has accelerated its internal timelines. The rumour depends on anonymous claims, misinterpretation, and wishful thinking — not documented performance.

- For related context, see: Did OpenAI Train on Everyone’s Private Data?

- For broader pattern analysis, see: The “Sparks of AGI” Autopsy