How chatbots actually work behind the scenes

A plain explanation of how chatbots work behind the scenes: they convert text into tokens and repeatedly predict the most likely next one.

Chatbots can appear mysterious from the outside: sometimes eerily fluent, sometimes unexpectedly wrong, and often wrapped in marketing language that implies far more than the systems actually do. But behind the curtain, the core mechanism is simple, mechanical, and almost disappointingly unmagical.

Here is what is actually happening when you talk to a modern AI chatbot.

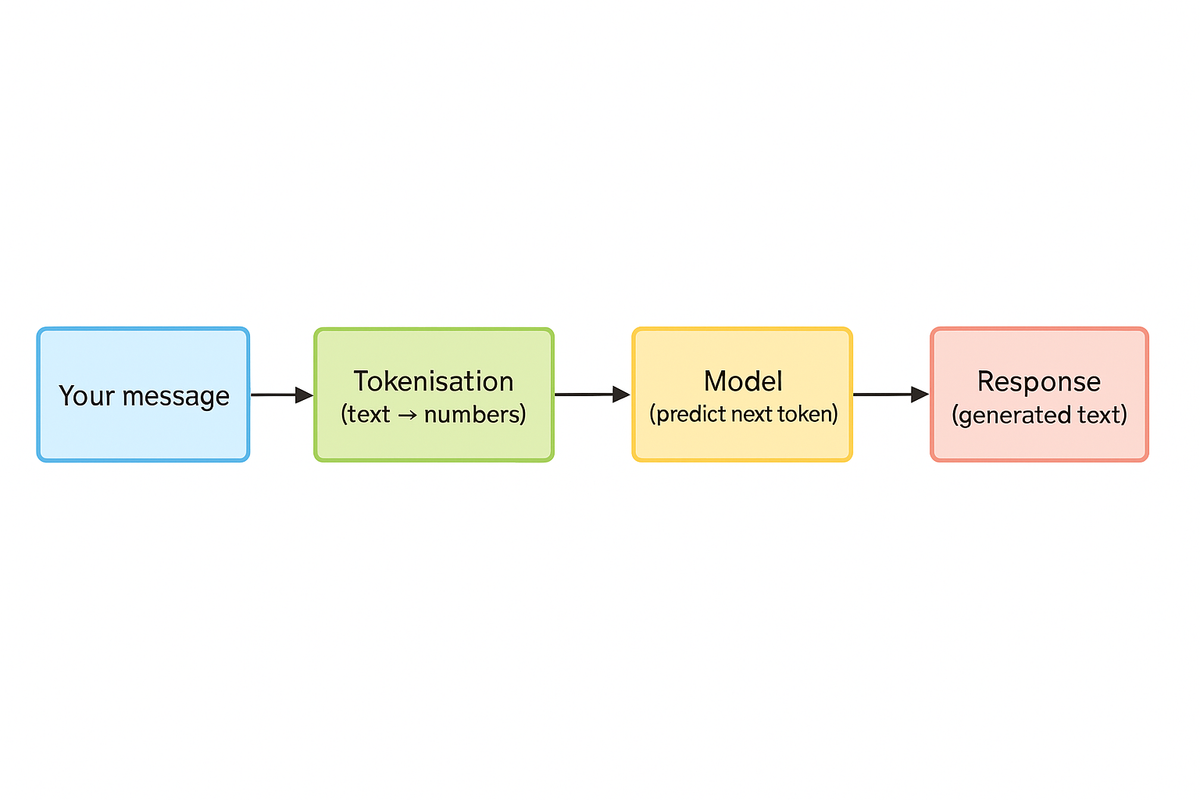

Your message becomes numbers

When you type a message, the chatbot doesn’t “read” it the way a human does.

Instead, your text is converted into tokens: fragments of words, each mapped to an integer ID from a fixed vocabulary.

For example, “reasoning” might become a sequence of several tokens.

Everything that follows operates entirely on numbers.
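To make the text-to-numbers step concrete, here is a minimal sketch of a greedy longest-match tokenizer. The vocabulary and its IDs are invented for illustration; real chatbots use learned vocabularies with tens of thousands of entries and their own splitting rules, so actual token boundaries will differ.

```python
# Toy vocabulary: made up for this example, not taken from any real model.
VOCAB = {
    "reas": 0, "on": 1, "ing": 2,
    "r": 3, "e": 4, "a": 5, "s": 6, "o": 7, "n": 8, "i": 9, "g": 10,
}


def tokenize(text: str) -> list[int]:
    """Split text into the longest known pieces and return their integer IDs."""
    ids = []
    pos = 0
    while pos < len(text):
        # Try the longest candidate first, then shrink until one matches.
        for end in range(len(text), pos, -1):
            piece = text[pos:end]
            if piece in VOCAB:
                ids.append(VOCAB[piece])
                pos = end
                break
        else:
            raise ValueError(f"no token covers {text[pos]!r}")
    return ids


print(tokenize("reasoning"))  # [0, 1, 2]
```

In this toy setup the word is split into "reas", "on", and "ing", and only the three integers are passed on to the model.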

The model predicts the next most likely token

The chatbot does not think, plan, introspect, or understand.

It performs one operation repeatedly:

Predict the next token.

Then the next one.

And the next.

One prediction at a time.

A conversation that looks like a deep discussion is, underneath, a chain of extremely fast probability calculations.
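The loop described above can be sketched in a few lines. This is a deliberately tiny stand-in: the probability table is hypothetical, it looks only at the previous token, and it always picks the single most likely option. A real model computes its probabilities with a neural network conditioned on the whole context, and usually samples rather than always taking the top choice.

```python
# Hypothetical next-token probabilities, invented for illustration.
NEXT_TOKEN_PROBS = {
    "<start>": {"the": 0.9, "a": 0.1},
    "the": {"cat": 0.6, "dog": 0.4},
    "a": {"cat": 0.5, "dog": 0.5},
    "cat": {"sat": 0.7, "ran": 0.3},
    "dog": {"ran": 0.8, "sat": 0.2},
    "sat": {"<end>": 1.0},
    "ran": {"<end>": 1.0},
}


def generate(max_tokens: int = 10) -> list[str]:
    """Repeatedly append the most likely next token until <end>."""
    tokens = ["<start>"]
    for _ in range(max_tokens):
        probs = NEXT_TOKEN_PROBS[tokens[-1]]
        next_token = max(probs, key=probs.get)  # greedy choice
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the <start> marker


print(generate())  # ['the', 'cat', 'sat']
```

Each pass through the loop is one prediction; the "sentence" is simply what accumulates.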

Context is the only “memory”

The model does not store memories between conversations.

It only sees the conversation so far in its context window — a temporary working buffer.

If the conversation is long enough, the earliest parts fall out of the context window, and the model can no longer see them.

If the context is well-structured, the model performs better.

If it’s chaotic, the model drifts.

There is no hidden long-term narrative, no stored personality, no internal diary.

Only the provided text.
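As a rough sketch of how early messages drop out, the function below keeps only the most recent messages that fit a fixed budget. The function name, the budget, and the word-count stand-in for token counting are all assumptions made for this example; real systems count actual tokens and may use other strategies, such as summarising older turns.

```python
def fit_to_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the newest messages whose combined size fits the budget."""
    kept = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = len(message.split())  # crude stand-in for a token count
        if used + cost > max_tokens:
            break  # this message and everything older is dropped
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = ["my name is Ada", "I like chess", "what is my name?"]
print(fit_to_context(history, max_tokens=8))
# ['I like chess', 'what is my name?']
```

With a budget of 8, the message that contained the name no longer fits, so a model given only the remaining text has nothing to answer the question from.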

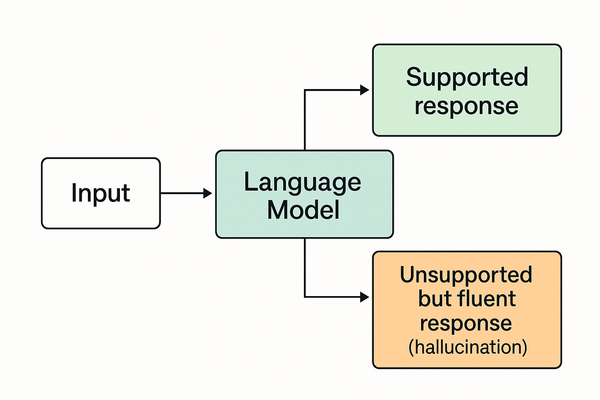

No facts — just patterns

Chatbots do not hold factual databases in the traditional sense.

They have patterns encoded in their parameters: statistical relationships between words and concepts.

If you ask:

“What is the capital of France?”

the patterns strongly favour “Paris.”

If you ask:

“What was the name of the dog owned by the 19th-century astronomer in Bavaria?”

the model has no pattern to rely on — so it guesses.

Sometimes elegantly.

Sometimes absurdly.

Hallucination isn’t a fault — it’s inherent to prediction.
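The difference between the two questions can be illustrated with a softmax, the standard function for turning raw scores into probabilities. The scores and candidate answers below are invented for the example (the dog names are placeholders, not claims about any real astronomer). The point is only that the selection step returns an answer in both cases, whether the distribution is sharply peaked or nearly flat.

```python
import math


def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {token: math.exp(s - peak) for token, s in scores.items()}
    total = sum(exps.values())
    return {token: e / total for token, e in exps.items()}


def pick(scores: dict[str, float]) -> tuple[str, float]:
    """Return the most likely token and its probability."""
    probs = softmax(scores)
    token = max(probs, key=probs.get)
    return token, probs[token]


# Made-up scores: a strong pattern versus almost no pattern.
capital_scores = {"Paris": 9.0, "Lyon": 2.0, "Berlin": 1.0}
dog_scores = {"Max": 1.1, "Bello": 1.0, "Rex": 0.9}

for label, scores in [("capital", capital_scores), ("dog", dog_scores)]:
    token, prob = pick(scores)
    print(f"{label}: {token} (p = {prob:.2f})")
```

In the first case nearly all the probability sits on one answer; in the second the winner barely edges out the alternatives, yet it is emitted just as fluently.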

Reinforcement and fine-tuning shape behaviour

Before you ever speak to a chatbot, it has already been refined through:

- supervised fine-tuning (training on examples of good responses)

- reinforcement learning, often from human feedback (rewarding preferred behaviours)

- safety tuning (discouraging harmful or unacceptable outputs)

This doesn’t give the model morality or values.

It gives it statistical pressure to behave in certain ways.

Polite, helpful, cautious chatbots are a product of fine-tuning, not personality.
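A very loose sketch of what "statistical pressure" means: below, three hypothetical response styles start out equally likely, and a simple reward-weighted update nudges the scores so that the rewarded style becomes more probable. The styles, rewards, learning rate, and function names are all assumptions for illustration. Real fine-tuning adjusts billions of parameters with far more elaborate methods, but the direction of the effect is the same: probabilities shift, nothing else is added.

```python
import math

RESPONSES = ["polite", "curt", "rude"]  # hypothetical response styles
REWARDS = [1.0, 0.0, -1.0]  # made-up preference scores


def softmax(logits: list[float]) -> list[float]:
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reward_step(logits: list[float], rewards: list[float], lr: float = 0.5) -> list[float]:
    """One gradient-ascent step on expected reward under the softmax."""
    probs = softmax(logits)
    baseline = sum(p * r for p, r in zip(probs, rewards))  # expected reward
    # Gradient of expected reward w.r.t. logit i is p_i * (r_i - baseline).
    return [x + lr * p * (r - baseline) for x, p, r in zip(logits, probs, rewards)]


logits = [0.0, 0.0, 0.0]  # all styles equally likely at the start
before = softmax(logits)
for _ in range(20):
    logits = reward_step(logits, REWARDS)
after = softmax(logits)

for name, b, a in zip(RESPONSES, before, after):
    print(f"{name}: {b:.2f} -> {a:.2f}")
```

After the updates the "polite" style dominates, not because the system acquired manners, but because its score was pushed up.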

The interface is theatre — the model is maths

Typing bubbles, friendly wording, “I” statements, and structured replies are all interface decisions designed to make interaction smoother.

Behind that interface, the model is still:

- a set of numbers

- performing token prediction

- guided by training

- unaware of itself or you

The expressiveness is engineered. The mechanism is mathematical.

So how do chatbots actually work?

In one sentence:

A chatbot is a large language model predicting the next most likely chunk of text, guided by training and wrapped in an interface that makes the output feel conversational.

Everything else people assume — memory, understanding, reasoning, personality — is an illusion created by highly sophisticated statistical patterns.

Why this matters

Understanding how chatbots really work helps readers:

- distinguish between capability and appearance

- recognise when a system is guessing rather than knowing

- interpret claims about “reasoning” with scepticism

- understand why chatbots sound confident even when wrong

A grounded view makes it easier to judge claims, rumours, and corporate rhetoric about what these systems supposedly can or cannot do.

- To see how misunderstandings about AI behaviour turn into headlines, explore our Rumours section.

- For how these narratives are used strategically, see our Investigation on benchmark framing.