DeepSeek’s next model will “solve NP-complete problems in real time,” claim leakers

Assessing the rumour that DeepSeek’s next model will be able to solve NP-complete problems in real time.

Claim: DeepSeek’s upcoming model can instantly solve NP-complete problems.

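Verdict: False. No AI model, and no other known algorithm, solves NP-complete problems exactly and efficiently across the board. Doing so would settle the still-open P versus NP question, and nothing in the rumour comes anywhere close to that.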

According to the latest round of hallucinating leaker accounts, DeepSeek’s next model isn’t just faster — it’s allegedly about to crack the kind of computer science problems that have resisted decades of actual research. Yes, apparently an LLM is going to casually rewrite computational complexity theory on a Tuesday afternoon.

The Claim

Certain “insider” accounts insist that DeepSeek’s upcoming model has achieved real-time solutions to NP-complete problems — portfolio optimisation, protein folding, logistics routing, you name it — all supposedly with deterministic accuracy and zero approximations.

The Source

A slurry of unverifiable posts summarised as:

– Anonymous “researcher” DMs

– Screenshot-free hype threads

– Generic AI influencer YouTube rambles

– Zero technical details beyond buzzphrases like “quantum-inspired,” “hyper-solver,” and “emergent computational shortcuts”

In short: claims built on vibes, not evidence.

Our Assessment

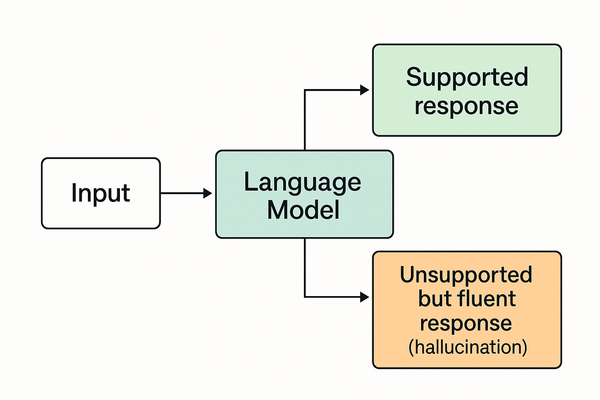

LLMs can produce plausible-looking answers to NP-hard problems because they have absorbed patterns, heuristics, and typical solutions from their training data. That is not the same thing as solving them, and it is certainly not solving them with provable optimality in real time.
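
Subset sum, a classic NP-complete problem, makes the gap between "plausible answer" and "solved" easy to see. The sketch below is purely illustrative and has nothing to do with DeepSeek's internals; the function names are hypothetical. Checking a proposed answer takes a single pass. Finding one by exhaustive search can mean trying all 2^n subsets.

```python
from itertools import combinations

def verify(numbers, target, candidate_indices):
    """Check a proposed subset-sum answer in time linear in the input size."""
    # Indices must be distinct and in range, and the chosen values must hit the target.
    if len(set(candidate_indices)) != len(candidate_indices):
        return False
    if not all(0 <= i < len(numbers) for i in candidate_indices):
        return False
    return sum(numbers[i] for i in candidate_indices) == target

def brute_force_search(numbers, target):
    """Exact search: tries up to 2**n subsets in the worst case."""
    n = len(numbers)
    for size in range(n + 1):
        for combo in combinations(range(n), size):
            if sum(numbers[i] for i in combo) == target:
                return list(combo)
    return None  # proving "no subset works" required checking every subset

nums = [3, 34, 4, 12, 5, 2]
answer = brute_force_search(nums, 9)
print(answer, verify(nums, 9, answer))  # prints: [2, 4] True
```

The asymmetry matters. A model's guess can be checked cheaply with something like `verify`, but a failed guess tells you nothing about whether a valid answer exists. Confirming that no valid answer exists is exactly the part that needs the expensive search.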

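There is no architecture change, scaling trick, or training magic in publicly known AI research that collapses NP-complete problems into polynomial time. Because every problem in NP reduces to any NP-complete problem, a polynomial-time algorithm for just one of them would prove P = NP, which would settle one of the Clay Mathematics Institute's Millennium Prize Problems. If DeepSeek had actually done this, they wouldn't be teasing it through Twitter randos; they'd be rewriting the global economy and collecting every major scientific award at once.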
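
A bit of arithmetic shows why "just scale it" doesn't help. In a symmetric travelling salesman problem with n cities, fixing the starting city and ignoring direction leaves (n-1)!/2 distinct round trips. The helper name below is hypothetical and exists only for illustration.

```python
from math import factorial

def distinct_tours(n_cities: int) -> int:
    """Number of distinct round trips in a symmetric TSP with n_cities >= 3.

    Fix the start city, order the remaining n-1 cities, then halve the count
    because a tour and its reverse have the same length.
    """
    if n_cities < 3:
        raise ValueError("need at least 3 cities for a tour")
    return factorial(n_cities - 1) // 2

for n in (5, 10, 20, 30):
    print(n, distinct_tours(n))
```

Going from 10 cities to 20 takes the count from 181,440 to roughly 6.1 × 10^16. Smarter exact methods do far better than enumerating every tour. Held–Karp dynamic programming, for example, runs in O(n^2 · 2^n). Even so, no known exact method has a polynomial worst-case bound, and a bigger model doesn't change that.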

The Signal

The only real signal buried under the hype:

– Labs are pushing LLMs harder into optimisation tasks

– Better heuristics are emerging because scale gives models more patterns to exploit

– Companies want investors to believe “AI will fix everything automatically”

But none of this equals “NP-complete problems solved.” It equals “people who barely passed CS101 repeating words they don’t understand.”
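
The difference between "better heuristics" and "solved" can be sketched on a toy travelling salesman instance. In the code below, a greedy nearest-neighbour heuristic stands in for any fast heuristic. It is not a claim about how LLMs or DeepSeek work. The heuristic runs in O(n^2) time, while the exact check enumerates (n-1)! orderings. The coordinates and function names are hypothetical.

```python
from itertools import permutations
from math import dist

def tour_length(points, order):
    """Total length of a closed tour visiting points in the given order."""
    return sum(dist(points[order[i]], points[order[(i + 1) % len(order)]])
               for i in range(len(order)))

def nearest_neighbour(points, start=0):
    """Greedy heuristic: always move to the closest unvisited city. No optimality guarantee."""
    unvisited = set(range(len(points))) - {start}
    order = [start]
    while unvisited:
        last = points[order[-1]]
        nxt = min(unvisited, key=lambda j: dist(last, points[j]))
        order.append(nxt)
        unvisited.remove(nxt)
    return order

def exact_tour(points):
    """Exhaustive search with city 0 fixed: (n-1)! orderings, only viable for tiny n."""
    rest = range(1, len(points))
    best = min(permutations(rest), key=lambda p: tour_length(points, (0, *p)))
    return [0, *best]

cities = [(0, 0), (1, 0), (3, 0), (-1, 0.5), (2, 2), (0, 3)]
h = tour_length(cities, nearest_neighbour(cities))
opt = tour_length(cities, exact_tour(cities))
print(f"heuristic {h:.2f} vs optimal {opt:.2f}")
```

On any instance, the heuristic tour can never be shorter than the exact optimum. How much longer it comes out depends on the instance. Without the exhaustive search, or some other certificate, you cannot tell how far from optimal a fast answer landed. That certificate is the part the rumour quietly skips.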

Verdict

Rumour Status: ❌ Computational Comedy

Confidence: 97%

This rumour is what happens when someone hears “LLMs can approximate optimisation problems” and decides that means “LLMs have overturned half of theoretical computer science.” They haven’t. They won’t. And anyone claiming otherwise is either confused or marketing.

- For foundational limits of model behaviour, see “What Is an AI Model, Anyway?”

- For how extreme capability claims spread, see “The Benchmark Game”.