Claude 3.5 was “secretly trained on 10× more tokens than OpenAI”

A review of the rumour that Claude 3.5 was secretly trained on ten times more data than OpenAI’s models.

Claim: Claude 3.5 used ten times more training data than any OpenAI model.

Verdict: Unproven — no data disclosures confirm this; the rumour stems from a single anonymous source.

Every time Anthropic releases a new Claude model, the internet immediately decides it has been trained on an infinite, metaphysical quantity of data — usually “10× more tokens than OpenAI,” “the entire internet twice,” or “every book ever written including the ones that don’t exist yet.”

This week’s rumour comes from a mysterious chart, a confidently incorrect Reddit thread, and someone who claimed to have “inside access” because they once visited San Francisco.

Let’s separate the hype from the physics.

The Claim

A graph began circulating online showing a comparison between “Claude 3.5” and “GPT-4.5,” with Claude allegedly trained on “10× more tokens” and achieving “near-perfect reasoning abilities.”

Accompanying posts claimed:

- Anthropic quietly trained the model on several trillion tokens

- The dataset included “highly confidential corporate knowledge”

- Claude 3.5 achieved “emergent general intelligence”

- OpenAI was “panicking internally”

- Anthropic would reveal everything in “a surprise announcement this week”

Naturally, no one agreed on what day “this week” actually meant.

The Source

The rumour originated from a Reddit user with a history of posting:

- Wrong predictions

- Misinterpreted benchmarks

- Crypto trading advice

- And, with impressive consistency, misreadings of technical papers

The earliest version of the chart reveals that:

• The y-axis labels do not correspond to any Anthropic benchmarks

• The colour palette is identical to a publicly available Google Slides theme

• The watermark is from an AI influencer YouTube channel

• The numbers do not match any known scaling law

• And the file metadata lists the author as “claude_fan_03”

A reliable source, obviously.

Our Assessment

Let’s break down the facts:

• Anthropic’s model cards and published research make no mention of a “10× token leap”, and the company has not disclosed training-token counts for Claude 3.5.

• A sudden jump of that magnitude would need roughly ten times the training compute at the same model size, well beyond any capacity Anthropic has publicly described.

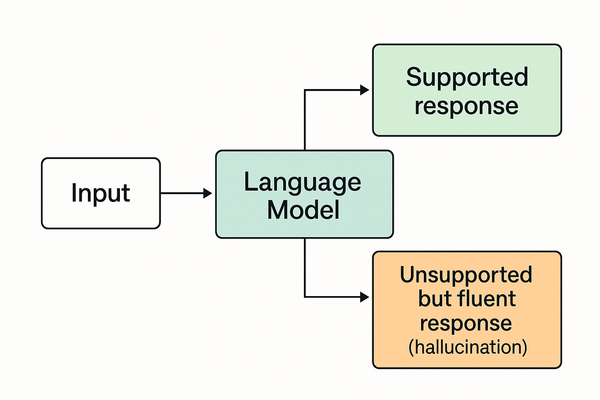

• Token-count rumours almost always conflate the effective training set (the data that survives filtering and deduplication) with inflated raw figures, many of them invented for engagement.

• No current or former Anthropic engineer has publicly suggested anything remotely close to this rumour.

• The chart uses terminology (“neural fluency”, “cognitive emergence”, “reasoning purity”) that appears nowhere in ML research.

• A reverse search shows the chart template was used previously for a video titled: “LEAKED! GOOGLE’S SECRET AGI MODEL EXPLAINED!”
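The compute point in the list above is easy to check with back-of-envelope arithmetic. The sketch below assumes the widely used approximation that training a dense transformer costs about 6 FLOPs per parameter per token (C ≈ 6 × N × D). The baseline model size and token count are hypothetical placeholders, not published figures for Claude or any OpenAI model.

```python
"""Back-of-envelope compute check for a "10x more tokens" claim.

Assumes the common approximation C ~= 6 * N * D for dense transformers
(N = parameters, D = training tokens). All sizes below are hypothetical
placeholders, not disclosed figures for any real model.
"""


def training_flops(params: float, tokens: float) -> float:
    """Approximate total training compute in FLOPs: C ~= 6 * N * D."""
    return 6.0 * params * tokens


def compute_multiplier(params_new: float, tokens_new: float,
                       params_old: float, tokens_old: float) -> float:
    """Training compute of the new run relative to the old run."""
    return training_flops(params_new, tokens_new) / training_flops(params_old, tokens_old)


if __name__ == "__main__":
    # Hypothetical baseline: 100B parameters trained on 10T tokens.
    base_params, base_tokens = 1e11, 1e13

    # Same model size, 10x the tokens: compute scales linearly with tokens.
    same_size = compute_multiplier(base_params, 10 * base_tokens,
                                   base_params, base_tokens)

    # 10x tokens AND 10x parameters (compute-optimal recipes tend to grow
    # both together): the two factors multiply.
    scaled_both = compute_multiplier(10 * base_params, 10 * base_tokens,
                                     base_params, base_tokens)

    print(f"baseline compute: {training_flops(base_params, base_tokens):.1e} FLOPs")
    print(f"10x tokens, same size: {same_size:.0f}x compute")
    print(f"10x tokens, 10x params: {scaled_both:.0f}x compute")
```

Running it reports a 10× compute multiplier for the same-size case and 100× if parameters grow as well. Either way, a “10× more data” claim is really a claim about a large, unannounced jump in compute, which the rumour never accounts for.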

In other words, this is not a leak.

It’s the fanfiction wing of the rumour economy doing cardio.

The Signal

Anthropic is working on:

• Larger multimodal Claude variants

• More efficient training methods

• Safer scaling frameworks

• Structured reasoning improvements

• Quietly expanding their compute capacity

But none of this implies:

• A 10× token dataset

• A secret supermodel

• A sudden leap beyond GPT-4.5

• Imminent AGI

• Or the fantasy benchmark chart circulating online

The real signal is simple: Anthropic is iterating, just like everyone else — at a normal pace, not a mythical one.

Verdict

Rumour Status: ❌ Token Inflation Fantasy

Confidence: 96%

Call me when the rumour is based on something more substantial than a Google Slides template and a creative Reddit imagination.

- For training fundamentals, see What Training Data Really Means.

- For context on benchmark comparisons, see The Benchmark Game.