AI Models Secretly Store Everything Users Type, Claim Critics

A common claim suggests AI models secretly store everything users type and can recall it later. There is no evidence this is how modern language models work.

Claim

AI models secretly store everything users type and can later recall private conversations, even when users believe chats are temporary or deleted.

Verdict

False.

There is no evidence that modern language models retain or recall user conversations as persistent memory.

A widely circulated claim suggests that AI systems quietly retain everything users type, creating a hidden store of personal data that can later be accessed or recalled. The claim is often raised in discussions about privacy, hallucinations, or fears that models are continuously learning from live conversations.

The Claim

The claim holds that AI chatbots and language models:

- store full records of user conversations internally

- retain those records across sessions

- can later recall or reuse private prompts and replies

In its stronger form, the claim implies that this storage is hidden from users and operates regardless of stated privacy policies.

The Source

This rumour typically originates from:

- social media commentary and forums

- speculative blog posts and videos

- misinterpretations of hallucinated responses

It is rarely supported by primary technical documentation and is often repeated without reference to how language models are actually built or deployed.

Our Assessment

Modern language models do not possess persistent, user-specific memory.

During a conversation:

- inputs are processed within a temporary context window

- responses are generated probabilistically

- the model's working context is discarded after processing and is never written back into its weights

The model itself does not retain transcripts for later recall.

It cannot “remember” individual users or past conversations unless the application explicitly places earlier text back into its context window.
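The distinction between the model and the application around it can be illustrated with a deliberately simplified sketch. It assumes a hypothetical `toy_model` function standing in for a trained model (real models are neural networks operating on tokens, not string matchers) and a hypothetical `ChatSession` class standing in for a chat application. The point it shows: the model is a function of fixed weights plus whatever context it is handed, so anything it "recalls" must be present in that context, and a new session starts with none of it.

```python
from dataclasses import dataclass, field


def toy_model(weights: dict[str, str], context: list[str]) -> str:
    """Hypothetical stand-in for a trained model.

    The output depends only on fixed weights (learned before deployment)
    and on the context passed to this call. Nothing is stored between calls.
    """
    question = context[-1]
    if question == "What is my name?":
        # The model can only "know" the name if it appears in this context.
        for message in context[:-1]:
            if message.startswith("My name is "):
                return "Your name is " + message.removeprefix("My name is ") + "."
        return "I don't know your name."
    # Otherwise fall back on fixed, pre-trained "knowledge".
    return weights.get(question, "I don't know.")


@dataclass
class ChatSession:
    """Hypothetical application-side session: the history lives here, not in the model."""
    weights: dict[str, str]
    history: list[str] = field(default_factory=list)

    def send(self, message: str) -> str:
        self.history.append(message)
        # Each turn, the conversation so far is resent as the context window.
        reply = toy_model(self.weights, self.history)
        self.history.append(reply)
        return reply


weights = {"Hello": "Hi there."}

first = ChatSession(weights)
first.send("My name is Ada")
print(first.send("What is my name?"))   # Your name is Ada.

second = ChatSession(weights)            # a new session starts with an empty context
print(second.send("What is my name?"))  # I don't know your name.
```

Within the first session the name is "remembered" only because the application resends the history on every turn; the `weights` dictionary is never modified, so the second session has nothing to draw on.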

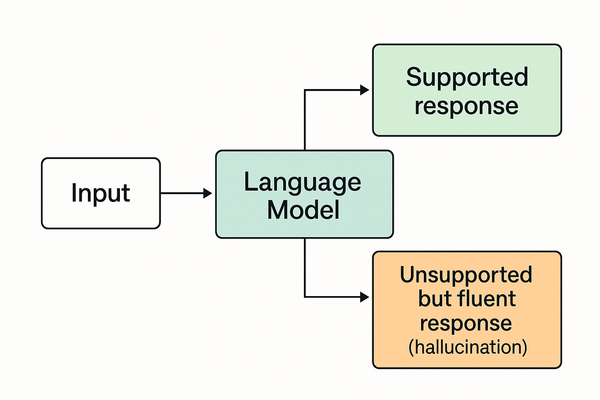

Confusion arises when:

- hallucinated outputs are mistaken for memory

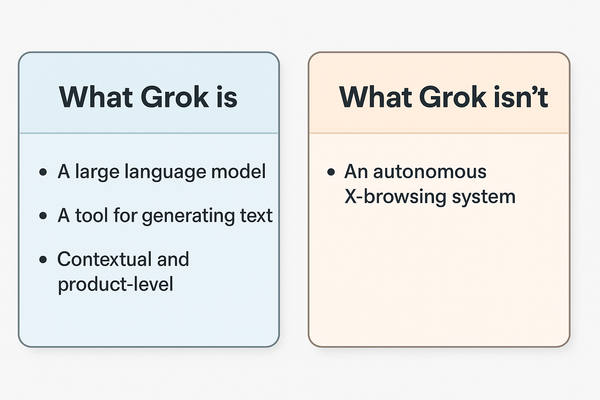

- product-level logging is conflated with model behaviour

- training processes are misunderstood as continuous ingestion

Logging, where it exists, is handled by the service infrastructure and governed by the platform’s data-retention policies; it is not memory the model can recall.
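The separation between service-level logging and model behaviour can be sketched the same way. This is a minimal illustration under stated assumptions: `echo_model` and `ChatService` are hypothetical names, the in-memory `log` list stands in for whatever storage a real platform might use, and no specific provider's architecture is implied.

```python
from dataclasses import dataclass, field
from typing import Callable

Model = Callable[[list[str]], str]


def echo_model(context: list[str]) -> str:
    # Hypothetical toy model: its output depends only on the context it receives.
    return f"Received {len(context)} message(s); last was: {context[-1]!r}"


@dataclass
class ChatService:
    """Hypothetical service layer that sits in front of the model."""
    model: Model
    logging_enabled: bool = True
    # Service-side log store. On a real platform, what is kept and for how
    # long would be set by the operator's retention policy, not by the model.
    log: list[dict] = field(default_factory=list)

    def handle(self, context: list[str]) -> str:
        # The model sees only this request's context, never the log.
        reply = self.model(context)
        if self.logging_enabled:
            # Recording the exchange is a service decision, separate from the model.
            self.log.append({"request": list(context), "reply": reply})
        return reply


service = ChatService(echo_model)
service.handle(["My card number is 1234"])
reply = service.handle(["What did I tell you earlier?"])
print(reply)             # Received 1 message(s); last was: 'What did I tell you earlier?'
print(len(service.log))  # 2
```

The earlier message is sitting in the service's log, which is why logging is a legitimate privacy question, but the model cannot reach it: the second request's context contains only the new message. That is the sense in which privacy concerns belong with the platform rather than the model.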

The Signal

This rumour reflects a broader pattern in AI discourse:

- fluent output is mistaken for knowledge or recall

- opaque systems invite assumptions of hidden behaviour

- privacy concerns are redirected toward the model rather than the platform

The persistence of this claim shows how easily architectural details are lost in public discussion, allowing fear-based narratives to spread faster than technical clarification.

Verdict

Rumour Status: ❌ Debunked

Confidence: 99%

Language models do not secretly store or recall everything users type. The claim rests on a misunderstanding of how conversational context, logging, and hallucinations work — and does not match observed system behaviour or published technical descriptions.

- For related context, see What AI Hallucinations Actually Are — and What They Aren’t

- For broader pattern analysis, see What “Model Memory” Actually Means in AI